Tech founder and AI engineer Elliot One shares a practical guide to building a fully local RAG system with Semantic Kernel, Ollama, and Qdrant. This hands-on tutorial shows how local document retrieval and AI reasoning can be combined to deliver accurate, private, and production-ready AI systems for real-world use.

Introducing Retrieval-Augmented Generation (RAG)

In modern AI applications, combining structured data retrieval with generative reasoning has become a critical capability. Retrieval-Augmented Generation (RAG) is a method that blends vector-based semantic search with Large Language Model (LLM) reasoning, producing responses that are not only contextually relevant but also grounded in reliable sources. This approach is particularly valuable in knowledge-intensive domains, such as legal, compliance, and technical workflows, where accuracy, transparency, and traceability are essential.

This article presents a fully local RAG system implemented in C#, demonstrating how Semantic Kernel can orchestrate embeddings, vector search, and LLM reasoning while maintaining modularity, privacy, and extensibility. The system uses Ollama to host the LLM and the embedding model locally and Qdrant for high-performance vector search. Developers following this guide can create a production-ready, fully local RAG pipeline capable of delivering precise, context-aware answers.

Local RAG System Architecture Overview

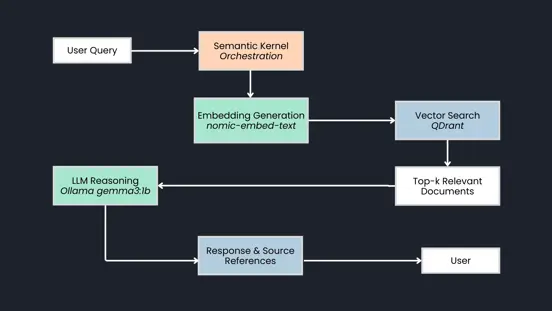

The core RAG workflow integrates four primary components:

- Embeddings: Text data is converted into vector representations using `nomic-embed-text:latest`.

- Vector Search: Qdrant stores these embeddings and performs efficient semantic similarity searches.

- LLM Reasoning: Ollama hosts the `gemma3:1b` model locally to generate context-aware answers.

- Semantic Kernel: Acts as the orchestration layer, integrating memory, embeddings, vector search, and model calls.

The workflow can be summarised as follows: a user submits a query, Semantic Kernel orchestrates embedding generation and retrieval from Qdrant, and the LLM produces a response grounded in the retrieved text.

This architecture separates deterministic and AI-driven components, allowing high modularity and predictability while maintaining the benefits of generative reasoning.

Kernel and Memory Configuration

Semantic Kernel provides centralised orchestration for AI and memory components. The KernelMemoryBuilder class, which comes from the companion Kernel Memory library, allows developers to register embeddings, vector storage, and LLM configuration in one place. A typical setup looks like this:

    // Register text generation, embeddings, and vector storage on one builder.
    var memoryBuilder = new KernelMemoryBuilder()
        .WithOllamaTextGeneration(ollamaConfig)
        .WithOllamaTextEmbeddingGeneration(ollamaConfig)
        .WithQdrantMemoryDb(LegalDocConfig.QdrantUrl)
        .WithSearchClientConfig(
            new SearchClientConfig
            {
                AnswerTokens = 4096 // token budget for a generated answer
            });

    // Build the memory instance used for both import and querying.
    var memory = memoryBuilder.Build(
        new KernelMemoryBuilderBuildOptions
        {
            AllowMixingVolatileAndPersistentData = true
        });

This configuration routes all model calls, embedding generation, and retrieval operations through a single memory instance. The result is a maintainable, modular system in which deterministic retrieval and AI reasoning work together.
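The setup above refers to `LegalDocConfig.QdrantUrl` and, later, `LegalDocConfig.IndexName` without showing the class. A minimal sketch of what such a configuration holder might look like follows. The URLs are the default local endpoints for Qdrant (port 6333) and Ollama (port 11434); the index name and the `OllamaUrl`, `TextModel`, and `EmbeddingModel` members are assumptions for illustration, not values taken from the original project.

```csharp
// Hypothetical configuration holder. Only QdrantUrl and IndexName are
// referenced in the article; every other member is an illustrative assumption.
public static class LegalDocConfig
{
    // Default REST endpoint of a locally running Qdrant instance.
    public const string QdrantUrl = "http://localhost:6333";

    // Default endpoint of a locally running Ollama server (assumed member).
    public const string OllamaUrl = "http://localhost:11434";

    // Models named in the article (assumed member names).
    public const string TextModel = "gemma3:1b";
    public const string EmbeddingModel = "nomic-embed-text:latest";

    // Name of the index that holds the legal documents (assumed value).
    public const string IndexName = "legal-docs";
}
```

Keeping endpoints and model names in one place means a different model or a remote Qdrant instance can be swapped in without touching the import or query code.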

Document Import and Indexing

In this system, legal documents such as NDAs, employment contracts, SLAs, and DPAs are transformed into embeddings and stored in Qdrant with associated metadata. This enables precise semantic retrieval during query processing. A typical import process looks like this:

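    // Requires: using System.Text.RegularExpressions;

    // Combine title and body into the text that gets embedded.
    var docText = $"Title: {doc.Title}\n\nContent: {doc.Content}";

    // Build a stable ID: lower-case the title and replace unsafe characters with '-'.
    var safeTitle = Regex.Replace(doc.Title.ToLowerInvariant(), @"[^a-z0-9._-]", "-");
    var documentId = $"legal-{safeTitle}";

    // Store the text with metadata tags for later filtering and attribution.
    await memory.ImportTextAsync(
        text: docText,
        documentId: documentId,
        index: LegalDocConfig.IndexName,
        tags: new TagCollection
        {
            { "title", doc.Title },
            { "type", "legal-document" },
            { "source", "sample-legal-database" }
        });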

Each document is assigned a unique identifier and enriched with metadata tags, allowing fast and accurate retrieval during query processing. By storing metadata alongside embeddings, the system preserves context, source attribution, and document type information for every query result.
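To make the identifier rule concrete, the title-to-ID step can be pulled out into a small helper and tried on sample titles. This is a sketch that mirrors the regular expression used in the import snippet; the `DocumentIds` class and `Create` method are hypothetical names, not part of the original project.

```csharp
using System.Text.RegularExpressions;

// Hypothetical helper that isolates the ID rule from the import snippet.
public static class DocumentIds
{
    // Matches every character outside the allowed set: a-z, 0-9, '.', '_' and '-'.
    private static readonly Regex UnsafeChars = new Regex("[^a-z0-9._-]");

    // Lower-cases the title, replaces unsafe characters with '-',
    // and adds the "legal-" prefix.
    public static string Create(string title)
    {
        var safeTitle = UnsafeChars.Replace(title.ToLowerInvariant(), "-");
        return $"legal-{safeTitle}";
    }
}
```

For example, `DocumentIds.Create("Non-Disclosure Agreement (NDA)")` yields `legal-non-disclosure-agreement--nda-`. Note that titles differing only in case or punctuation map to the same ID, so the IDs are unique only if the titles are distinct after this normalisation.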

Query Processing and Semantic Search

The system provides an interactive console interface for developers or end users. Queries are processed in the context of prior interactions to maintain conversational coherence. An example of how queries are handled is shown below:

    // Prepend recent conversation turns so follow-up questions keep their context.
    var fullQuery = chatHistory.GetHistoryAsContext() + "\nUser: " + userInput;

    var answer = await memory.AskAsync(
        fullQuery,
        index: LegalDocConfig.IndexName,
        minRelevance: 0.3f); // skip chunks scoring below 0.3

Chat history is preserved to provide contextual grounding across multiple interactions:

    // Return the most recent messages, one per line.
    public string GetHistoryAsContext(int maxMessages = 6) =>
        string.Join("\n", _messages.TakeLast(maxMessages));

This approach ensures that responses are not only contextually aware but also grounded in specific, retrievable document references, improving both accuracy and trustworthiness.
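The article shows only the `GetHistoryAsContext` method, so the class around it is left to the reader. A minimal sketch of a history holder is given below. The `ChatHistory` class name, the `AddUserMessage` and `AddAssistantMessage` methods, and the "User:" and "Assistant:" prefixes are assumptions; messages are joined with a newline so that consecutive turns do not run together.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical chat history holder; only GetHistoryAsContext appears in the article.
public sealed class ChatHistory
{
    private readonly List<string> _messages = new List<string>();

    // Each turn is stored with a role prefix so the model can tell speakers apart.
    public void AddUserMessage(string text) => _messages.Add($"User: {text}");

    public void AddAssistantMessage(string text) => _messages.Add($"Assistant: {text}");

    // Returns the most recent messages, oldest first, one per line.
    public string GetHistoryAsContext(int maxMessages = 6) =>
        string.Join("\n", _messages.TakeLast(maxMessages));
}
```

The `maxMessages` window keeps the prompt bounded as a conversation grows. Because the history is prepended to the question, it also becomes part of the text that is embedded for retrieval, so a smaller window may give more focused search results at the cost of less conversational context.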

User Experience

With this setup, users can pose detailed, domain-specific questions such as:

- “What are the key confidentiality clauses in the NDA?”

- “How can I terminate the employment contract?”

- “What is the defined uptime in the SLA?”

When a query is submitted, the system executes the following steps:

- The query is embedded using `nomic-embed-text`.

- Semantic search is performed in Qdrant to retrieve the most relevant document sections.

- The retrieved content, along with conversation context, is passed to the local LLM running in Ollama (`gemma3:1b`).

- A context-aware response is generated, including references to the source documents.

This combination of deterministic retrieval and AI reasoning ensures responses are reliable, verifiable, and actionable.
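The retrieval step above can be illustrated with a brute-force, in-memory stand-in: score every stored vector against the query vector with cosine similarity, drop anything below a relevance threshold, and keep the best few. This is only a sketch of the idea. Qdrant uses an approximate index rather than a full scan, and its distance metric is set per collection. The `SimilaritySearch` class, its method names, and the `limit` parameter are hypothetical; the 0.3 default mirrors the `minRelevance` value used in the query snippet.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory illustration of similarity search with a relevance cut-off.
public static class SimilaritySearch
{
    // Cosine similarity between two vectors of equal length.
    public static double Cosine(float[] a, float[] b)
    {
        if (a.Length != b.Length)
            throw new ArgumentException("Vectors must have the same length.");

        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.Length; i++)
        {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }

        // A zero vector has no direction, so treat it as unrelated.
        if (normA == 0 || normB == 0) return 0;
        return dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
    }

    // Returns up to `limit` chunk IDs scoring at least `minRelevance`, best first.
    public static List<(string Id, double Score)> TopMatches(
        float[] query,
        IReadOnlyDictionary<string, float[]> chunks,
        double minRelevance = 0.3,
        int limit = 3)
    {
        return chunks
            .Select(c => (Id: c.Key, Score: Cosine(query, c.Value)))
            .Where(m => m.Score >= minRelevance)
            .OrderByDescending(m => m.Score)
            .Take(limit)
            .ToList();
    }
}
```

With a query vector of `[1, 0]` and stored vectors `[1, 0]`, `[1, 1]`, and `[0, 1]`, the first two pass the 0.3 threshold (scores 1.0 and roughly 0.707) and the third is filtered out with a score of 0. Raising the threshold trades recall for precision, which matters when only a few chunks fit into the prompt of a small model.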

Key RAG Architecture Principles

The architecture separates deterministic and AI-driven components:

- Deterministic Retrieval: Qdrant offers fast and reliable semantic search.

- AI Reasoning: Semantic Kernel orchestrates embeddings, memory access, and LLM reasoning.

This separation improves modularity, testability, and predictability. Developers can extend or modify one component without affecting others, allowing experimentation and adaptation for other knowledge-intensive domains.

Why This Architecture Works

- Separation of concerns: Deterministic retrieval and generative AI reasoning operate independently while remaining coordinated.

- Modularity: `KernelMemory` centralises embeddings, prompts, and model calls.

- Local execution: All models and vector storage run locally, which keeps data on the machine and avoids network round trips to a hosted API.

- Extensibility: New document sources, models, or interfaces can be added with minimal changes to the core system.

RAG Setup Highlights

The system is designed for local deployment with the following setup:

- Runtime: .NET 9.0 SDK for development and execution.

- Vector storage: Qdrant running locally for semantic vector search.

- AI runtime: Ollama hosting local models, including `nomic-embed-text:latest` for embeddings and `gemma3:1b` for language model inference.

- Code structure: A modular codebase organised into separate components, including `LegalDocumentImporter.cs` for document import, `LegalDocConfig.cs` for configuration, `LegalDocRagApp.cs` for orchestration, and `ChatService.cs` for the console interface.

Potential Enhancements

To enhance this system further, you can incorporate additional document sources to expand coverage, implement web or desktop interfaces for improved accessibility, introduce long-term memory to preserve context across sessions, apply advanced natural language query parsing and dynamic indexing pipelines, and adapt the RAG workflow for other knowledge-intensive domains such as healthcare, finance, or engineering.

Conclusion

This article demonstrated how to build a fully local RAG system combining Semantic Kernel, Ollama, and Qdrant. By integrating deterministic vector search with local LLM reasoning, the system produces accurate, context-aware answers while maintaining transparency through source document references. Its modular architecture, local execution, and extensibility make it suitable for professional, production-ready applications in legal, compliance, and technical workflows.

You can explore the full source code for this local RAG system on GitHub and experiment with your own datasets to build fully local, context-aware AI solutions. By combining deterministic computation with generative reasoning, you can create AI systems that are both powerful and trustworthy.
